It’s not every day that you stumble across a fundamentally new form of storytelling, but that’s exactly what’s happened in the last few months thanks to a confluence of exciting new technologies and some smart, motivated people working in the so-called field of “virtual reality.”

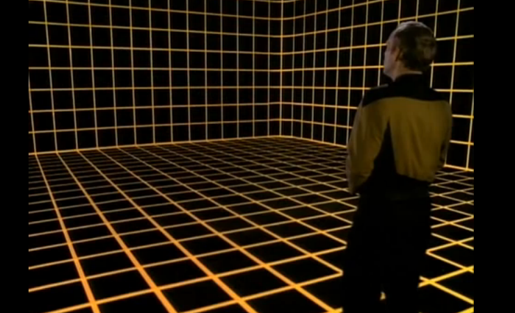

But before I continue, I just want to warn you that everything you’re about to hear and see will sound absolutely ridiculous, almost as if it was dreamed up for a science fiction story or lifted from Star Trek. And in a sense, as with most new technologies, it was — but science fiction has now become science fact.

What I’m about to show you is how the “Holodeck” experience first popularized by Star Trek: The Next Generation, and also explored by novelists William Gibson and Neal Stephenson, is starting to become real. It’s set up in my office right now, and also about 10 miles west of me in the empty barn, affectionately referred to as the “Holobarn,” of my creative hacker friend Lorne Covington, who operates under the banner of NoirFlux.

This video from my class at the S.I. Newhouse School, New Tech for New Media, shows a little of what’s possible.

What you will see in the video are three distinct technologies: 1) an Oculus Rift VR headset that generates a 3D image, 2) a Kinect depth camera that monitors your movement in 3-dimensional space, and 3) a graphics PC that synthesizes all of these inputs and outputs the experience wirelessly to the Rift. There’s also a second depth camera mounted on top of the Rift that allows the tester to see her hands as she moves them around.

In this case, the VVVV environment that Covington has programmed is creating the illusion of a screen floating in the middle of the air inside a house based on an Escher painting. The screen has Google Maps projected onto it, and the student is navigating the map by using iPad-like swipe gestures. (I warned you that this would start to sound ridiculous!)

Turning the classroom into a holodeck

All 16 students in the NTNM class went through this experience, and a few conducted extended labs. Together we have been thinking about what it means for storytelling in the future, if you can even call it that. It’s more like story-experiencing, or what VR pioneer Nonny de la Peña refers to as Immersive Journalism. When you work with VR you quickly start to realize that there’s no vocabulary to describe some of these concepts.

Using the Unity3D gaming engine, we’re now experimenting with creating virtual worlds that people can walk through — like this city in a canyon that I arranged myself in a couple of hours.

We have found that once you get over a small learning curve, it’s surprisingly easy to create rich environments using 3D models from the Unity Asset Store, then let people explore them. Some of my students are taking the extra step of doing simple usability testing to get a sense of how people respond to these types of immersive experiences.

One student recently sent people through a virtual world and asked for feedback on what it was like, which you can read about in the lab report. What I find most interesting is how universally positive and excited people are after entering these environments, which is not the usual response to new technologies. Some remark that they could stay in it forever, and they only come out once they feel nauseous, an effect that is more likely when using the Rift from a chair. When you walk around in the “Holodeck” configuration, which uses your physical location in the room for navigation, motion sickness is less common. Your mind receives the movement signals that it expects based on what your eyes are seeing.

What will you put in your Holodeck?

I predict that we will soon start seeing similar Holodecks all over the place. That’s because it’s not a single product you buy off the shelf. It’s made up of a bunch of different technologies, most under $400 and many open-source, that are combined to create immersive experiences. With enough tinkering and patience anyone can do this for $2,000 or less depending on what other computing equipment they have lying around.

While there are many implications for this technology, as the Newhouse School’s Horvitz Chair of Journalism Innovation my focus is on journalism and storytelling. What kinds of stories and experiences can we “tell” (or “transfer”) to help people understand complex ideas by letting them walk through and manipulate them? The possibilities are endless. Here are just a few ideas I’ve been contemplating over the last few months:

- Experience the effects of climate change: It’s one thing to read about what rising ocean levels mean for coastal areas, and quite another to stand in New York City, London or New Orleans, dial in a date and climate study, and see if you’re underwater.

- Science and medicine: When the next big cancer drug comes out, you could walk inside a cell and look around as the drug does its work on cellular DNA. Heard about that new planet NASA found? Plug in your goggles and go there.

- Visualizing data: Data visualization, much of it driven by the Data-Driven Documents (D3.js) library, has been a big trend in journalism lately. A logical next step is to create 3-dimensional, immersive visualizations that you can fly through and manipulate using your hands and entire body.

Over the next year I plan to spend time with media companies exploring some of these ideas through my new FutureForecast Consulting business. I also hope to host a panel and workshop about it at the Online News Association conference in September along with NoirFlux, Nonny de la Peña and some others.

And speaking of de la Peña, she is already working on her third immersive documentary with one of the prototypes that Palmer Luckey used before he launched the Oculus Rift. Here’s a video of her second piece, Project Syria.

Going beyond virtual reality

Perhaps you’re thinking, OK Pacheco, I get it. Sounds like a gaming platform. How does that relate to journalism? And this is where things start to get really trippy. Are you ready?

In the not too distant future, I’m convinced that it will be possible, maybe even commonplace, to inject 3D information that is either scanned or inferred from the physical world into a Holodeck environment. At that point, the term “virtual” doesn’t really do it justice. It may feel more like transferred reality, or what Fast Company writer Rebecca Greenfield calls 3D Transportation.

The pieces to do this are all available now, such as:

- 3D scanning drones like SenseFly are scanning mountains and mining sites as we speak. What happens when this technology costs not $40,000, but $400? It will happen.

- A company called Replay Technologies can create Matrix-like instant replays using data captured from 4K cameras all around the stadium. Here’s a video of the replay, and here’s more about how it works. What happens when such systems can be placed anywhere, perhaps even carried by drones? Then the 4K arena can be taken anywhere — such as the site of a recent earthquake or a large outdoor event — and people can virtually teleport in from home.

- Small handheld scanners like Google’s Project Tango or Structure.io will make it possible to scan places as you visit them, then export what you scanned as 3D object files.

- And finally, craziest of all, data from 2-dimensional photos can now be combined to extrapolate realistic 3D objects. Cornell Professor Noah Snavely recently used randomly posted photos from Flickr to create a 3D point-cloud reconstruction of Dubrovnik. And another creative hacker has created a way to see 3D point clouds using data from Google’s Street View.

Based on this, what’s to stop someone (maybe me!) from going to a place like Machu Picchu, scanning the inside and outside of every building, scanning it from a drone above, then sending you a link so you can virtually go to Machu Picchu from your Oculus Rift?

This is the point at which you Trekkies out there can confidently say, “Computer, Arch. Take me to Machu Picchu circa early 21st century Earth.” It sounds like science fiction or magic, but the technology is available to do all of this now. As Arthur C. Clarke so eloquently put it, “Any sufficiently advanced technology is indistinguishable from magic.”

Collective community visioning

On a final note, I have been thinking about how advances in 3D scanning, visualization and printing can be combined to better engage and inform communities around building projects. In the spirit of throwing spaghetti on the wall to see if it sticks, I’ve thrown this idea out there under the banner of Visualize Cities. I’m already talking logistics with stakeholders across Syracuse University, including at the School of Architecture and College of Visual and Performing Arts. The next step is to find some community partners. If you’re also interested, fill out the form on the page and I’ll get in touch.

Dan Pacheco is the Chair of Journalism Innovation at the S.I. Newhouse School. He worked at Washingtonpost.com as one of the first online producers in 1996, and on Digital Ink, The Washington Post’s first online service before the introduction of the consumer Internet.

This post originally appeared on Journovation Central.

Dan Pacheco is the Peter A. Horvitz Chair in Journalism Innovation at the S.I. Newhouse School at Syracuse University. Currently a professor teaching entrepreneurial journalism and innovation, he is also an active entrepreneur and CEO of BookBrewer, which he co-founded. He’s previously worked as a reporter (Denver Post), online producer (Washingtonpost.com, founding producer), community product manager (AOL) and news product manager (The Bakersfield Californian). His work has garnered numerous awards, including two Knight-Batten Awards for Innovation in Journalism, a Knight News Challenge award in 2007 (Printcasting) and an NAA 20 Under 40 award (Bakotopia). At last count, he’d launched 24 major digital initiatives centered around community publishing, user participation or social networking. Pacheco is a proponent of constant innovation and reinvention for both individuals and industries. He believes future historians will see today as the golden age of digital journalism, and that its impact will overshadow current turmoil in legacy media.

Hi,

Really interesting topic, first of all.

As a filmmaking student, I’m really intrigued by this new technology.

Sure, it will enable a new and different type of storytelling. It’s no longer about presenting a story to a subject, but about letting the subject become aware of the story in real time, that is, explore the story as if he were in it and personally living it.

It also reminds me of those books in which the reader is the actual hero of the story, and certain choices or actions send him to different pages instead of just the next one.

My point here is that the subject is totally free to choose, but common sense tells us that too many choices equal no choice at all.

So how do you lead the subject to the story you actually want to tell?

The sound? With the help of 3D microphones.

The climate? Through support for and integration of a weather-effects component (what if you could fake a sandstorm and make it visually believable?).

The motion? By tricking the brain. Through action, let it believe you are jumping from the 15th floor of a building or flying above the atmosphere.

My main question is this: do you tell a story visually, or is it cleverer to write a story with a digital feel?

Glad you see the potential. There are many, many different opportunities here including non-fiction and fiction storytelling. I’m not sure that “storytelling” is even the right word for this. It’s more about putting someone into an experience.

The “virtual” in “virtual reality” also starts to lose meaning when you think about the possibility of scanning and importing objects and environments from the real world. In that case it would be more like time-shifted telepresence.

Exciting times ahead!

Big times ahead, there is no doubt!

It’s clear that the entertainment industry will drive the development of most VR technology going forward.

The big challenge is to keep the subject fully immersed inside the environment. A truly immersive experience makes the subject forget his real surroundings so that he can fully experience the narrative, because as long as the subject is aware of the device, he is not truly immersed.

That is, in my opinion, the key point, above all in immersive fiction storytelling or story-experiencing. If the subject can move seamlessly inside the environment, it can be called an interactive experience.

As we know, poor interaction can drastically reduce the sense of immersion. The idea behind all of this is to find ways to engage the subject, since that can increase his immersion. Am I right so far?

If so, the viewing experience changes completely, just as the storytelling does, because we give the subject agency, which allows him to experience the story in his own unique way. That is amazing, but at least a bit tricky for fiction writers, I think.

Just as we learned with the web, too many choices equal none at all. So could it be an idea to make the audience only an eyewitness to an unfolding drama by providing a powerful embodied experience, in which the user is a ‘passive’ third character inside the story, and the other characters interact with him and lead the story as if the user were a deaf and mute character? Or is it better to let the subject take his own actions and make him the actual protagonist of the story, with the other characters reacting to his actions and choices along the narrative?

The trap is how to build a story from different starting points without shifting the story itself.

Good points. The wonderful thing about this technology right now is that there are no rules, so people should try everything you mention and then some.

Nonny is the pioneer in “passive” immersive stories where the subject can’t change the scene. That’s one approach, but not the only one. It really depends on your objectives.

If the objective is to let someone experience something that actually happened, you don’t want the experiencer to be able to impact the scene. But if the objective is to understand a concept — such as is the case with a science, medical or environmental story, or any kind of data visualization — it might make sense for the subject to be able to change the VR environment.

I really admire the work of Nonny de la Peña. The concept behind Project Syria is amazing!

She’s like one of the Lumière brothers, in my opinion. I am sure she is also aware that creating new rules for a new medium isn’t that simple, even though there are no limitations within this new technology (except the developer himself).

Which brings us to André Bazin, who used to ask:

“C’est quoi le cinéma ?” / “What is cinema?”

Some of us already proclaimed the death of cinema in the eighties, when the remote control appeared, because cinema became interactive.

Years later, technology is growing so fast that even the term “communication” has changed meaning.

I also believe that every rule created by the pioneers of ‘traditional’ cinema has to be rewritten, such as the classic camera-as-eye, which is obsolete with this technology because the viewer needs to be INSIDE.

I still think that the “new cinema,” or living scenario/cinema as I call it, will certainly not be cinema as we know it, because it could be traumatic to embody a character for more than two hours and then go back to real life. So living cinema is all about re-watching the same content over and over until the audience gets its full meaning, almost like in science.

Does that mean shorter stories? A YouTube/Vimeo format? Online distribution?

I have the feeling that we are in a prologue to Strange Days, written by James Cameron and Jay Cocks.

I am looking forward to finding people who will help clarify these new rules, new senses of engagement and new ways to critique such a medium.