Algorithm journalism is now available for everyone.

A beta version of Wordsmith, a program that creates journalism from data, was made available by Automated Insights on its website in October, the company announced.

But one of the world’s largest news organizations already uses the software to automatically generate some stories – and its standards editor said the ethics of the software have to be carefully considered.

“We want to make sure that we’re doing everything the way we should,” Associated Press standards editor Tom Kent said. “We take our ethics very seriously.”

During the past year, the AP multiplied its publication of earnings reports tenfold. The number of earnings reports the AP publishes each quarter increased from 300 stories to nearly 3,000.

The mass quantity of stories that the software can produce has led to the moniker “robot journalism,” but Kent said questions have turned from the capacity of robot journalism to the ethics of this new production tool.

Photo by Arthur Caranta and used here with Creative Commons license.

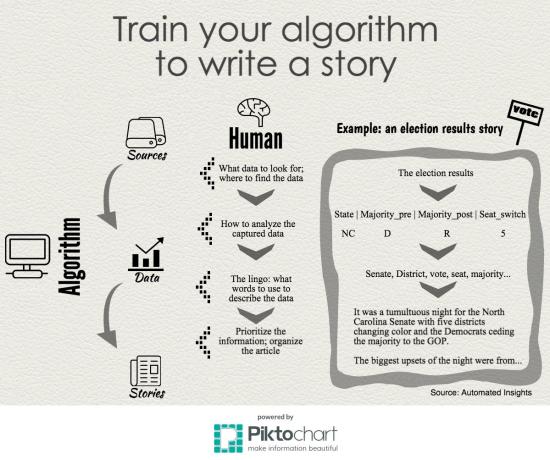

“Robot journalism” is less a C-3PO-like character typing the news, and more the automation of data collection, using algorithms to produce text news for publication. The process is forcing journalists like Kent to address best practices for gathering data, incorporating news judgment in algorithms and communicating these new efforts to audiences. The AP announced its partnership with Automated Insights, a Durham, North Carolina-based software company that uses algorithms to generate short stories, in June 2014.

Collecting Data

Accuracy is just one concern when it comes to algorithmically gathering data.

According to a January press release from Automated Insights, automated stories contain “far fewer errors than their manual counterparts” because such programs use algorithms to comb data feeds for facts and key trends while combining them with historical data and other contextual information to form narrative sentences.

New York Magazine writer Kevin Roose hypothesized similarly, saying, “The information in our stories will be more accurate, since it will come directly from data feeds and not from human copying and pasting, and we’ll have to issue fewer corrections for messing things up.”

When errors do surface, the process for editing is more or less the same as for human writers: review, revise and repeat. Once editors catch algorithmic mishaps, developers change the code to ensure they don’t happen again.

“We spent close to a year putting stories through algorithms and seeing how they came out and making adjustments,” Kent said.

He added that a less obvious concern has less to do with what data is collected and more to do with how it is collected.

“Data itself may be copyrighted,” Kent said. “Just because the information you scrape off the Internet may be accurate, it doesn’t necessarily mean that you have the right to integrate it into the automated stories that you’re creating – at least without credit and permission.”

Synthesizing and Structuring Data

Besides collecting data, the way in which algorithms organize information requires additional ethical consideration.

“To make the article sound natural, [the algorithm] has to know the lingo,” BBC reporter Stephen Beckett wrote in a September article. “Each type of story, from finance to sport, has its own vocabulary and style.”

Stylistic techniques like lingo must be programmed into the algorithm at human discretion. For this reason, Kent suggests that robot reporting is not necessarily more objective than content produced by humans and is subject to the same objectivity considerations.

“I think the most pressing ethical concern is teaching algorithms how to assess data and how to organize it for the human eye and the human mind,” Kent said. “If you’re creating a series of financial reports, you might program the algorithm to lead with earnings per share. You might program it to lead with total sales or lead with net income. But all of those decisions are subject – as any journalistic decision is – to criticism.”

Since news judgment and organization are ethical questions that carry over from traditional reporting to robot journalism, Kent suggests addressing them in the same way.

“Everyone has a different idea about what fair reporting is,” he said. “The important thing is that you devote to your news decisions on automated news the same amount of effort you devote to your ethics and objectivity decisions at any other kind of news.”
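Kent’s example of choosing which figure leads an earnings story can be made concrete with a small sketch. The Python below is an illustration only, not how Wordsmith or the AP’s system works: the company, the figures, the field names and the `lead_sentence` function are all invented here. It shows how a single human-chosen setting decides what readers see first, and how the wording for each metric is also written by a person.

```python
# Hypothetical earnings record. Every name and figure is invented for illustration.
REPORT = {
    "company": "Example Corp",
    "eps": 1.25,
    "eps_prior": 1.10,
    "sales": 4.2e9,
    "sales_prior": 4.0e9,
    "net_income": 3.1e8,
    "net_income_prior": 3.4e8,
}

# Human-written phrasing and number format for each metric.
# This table is one place where editorial judgment enters the code.
LABELS = {
    "eps": ("earnings per share", "${:,.2f}"),
    "sales": ("total sales", "${:,.0f}"),
    "net_income": ("net income", "${:,.0f}"),
}


def lead_sentence(report, lead_metric):
    """Build a lead sentence around the metric an editor chose to lead with."""
    label, fmt = LABELS[lead_metric]
    current = report[lead_metric]
    prior = report[lead_metric + "_prior"]  # year-earlier value for context

    if current > prior:
        direction = "up from"
    elif current < prior:
        direction = "down from"
    else:
        direction = "unchanged from"

    return "{} reported {} of {}, {} {} a year earlier.".format(
        report["company"], label, fmt.format(current), direction, fmt.format(prior)
    )


if __name__ == "__main__":
    # The same data yields a different first impression for each choice of lead.
    for metric in ("eps", "sales", "net_income"):
        print(lead_sentence(REPORT, metric))
```

With these invented figures, leading with earnings per share or total sales opens the story on an increase, while leading with net income opens it on a decline. The data is identical in each case; only the programmed choice differs, which is the kind of decision Kent says is open to criticism.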

Sharing Data

Finally, journalists must make decisions about the extent to which they engage audience members in the process of robot journalism.

University of Wisconsin-Madison journalism Prof. Lucas Graves, whose research focuses on new organizations and practices in the emerging news ecosystem, says disclosure is a must.

“I absolutely think outlets should be disclosing the use of algorithms,” he said. “If they aren’t, then they need to be asking themselves why.”

Kent echoes that sentiment and goes further in his Medium piece, suggesting that outlets provide a link that identifies the source of the data and the company that provides the automation, and that explains how the process works.

As algorithms develop and become more complicated, Kent also advises that journalists remain diligent in understanding both how algorithms work and how they interact with journalism ethics.

“As complicated as the algorithm gets, you have to document it carefully so that you always understand why it did what it did,” he said. “Just as any journalist who covers a particular area should periodically reevaluate how she writes about the topic, we should always reevaluate algorithms. There is nothing about automated journalism that makes it less important to pay attention to ethics and fairness.”

This piece originally appeared on the University of Wisconsin’s Center for Journalism Ethics website.

Meagan Doll is a senior at the University of Wisconsin-Madison, studying journalism. She is an intern for the EducationShift section at MediaShift and an undergraduate fellow at the University of Wisconsin-Madison Center for Journalism Ethics where this post originally appeared.